Technical SEO: Optimizing Your Website for Search Engines

Why is Technical SEO Important?

Technical SEO helps ensure that your website appears in search results, which in turn drives traffic, revenue, and business growth. It affects how your website performs on Google and helps improve user experience.

Common Tasks Associated with Technical SEO:

Common technical SEO tasks include:

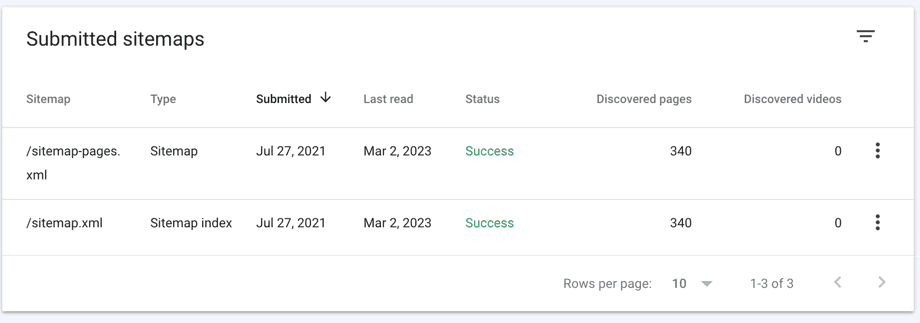

- Submitting your sitemap to Google

- Creating an SEO-friendly site structure

- Improving your website's speed

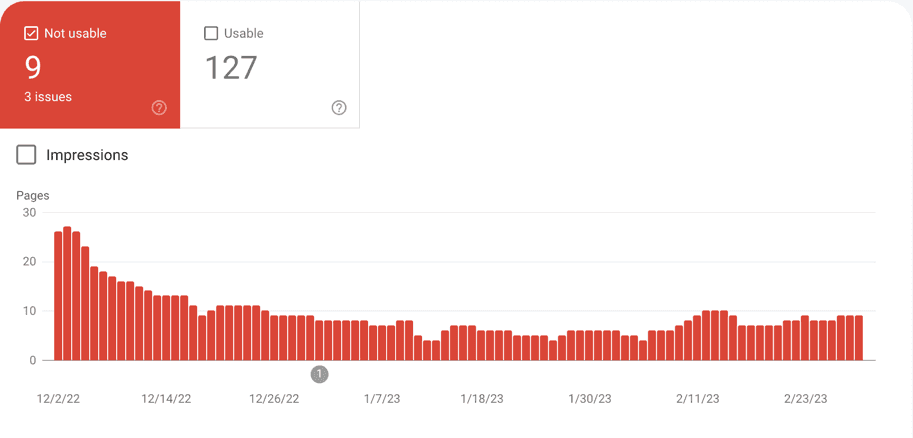

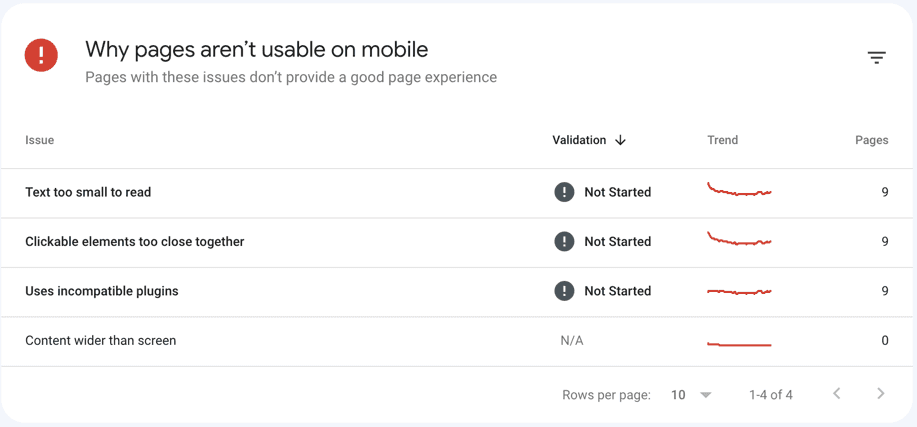

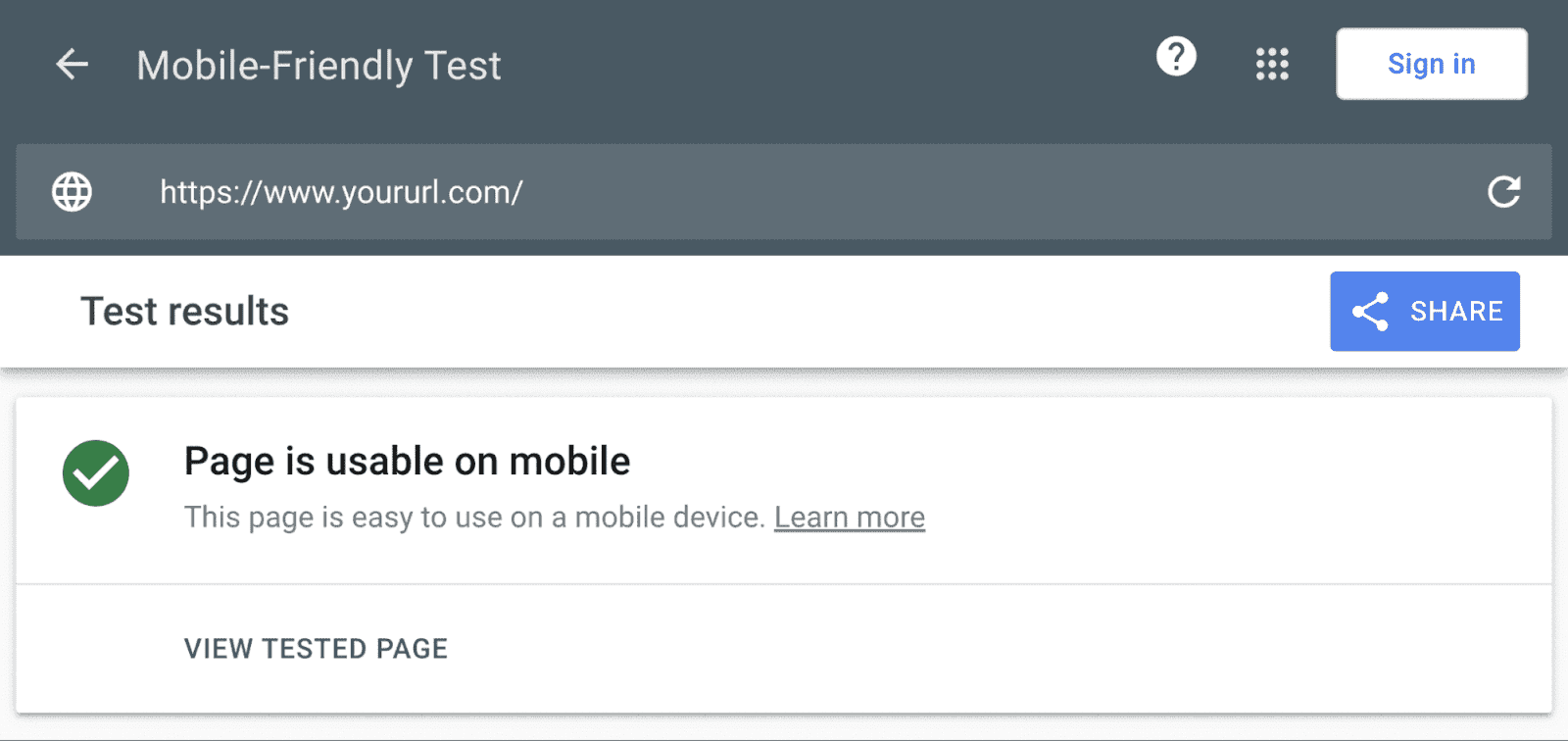

- Making your website mobile-friendly

- Finding and fixing duplicate content issues

And much more

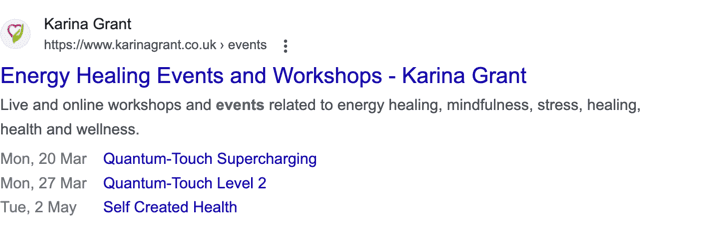

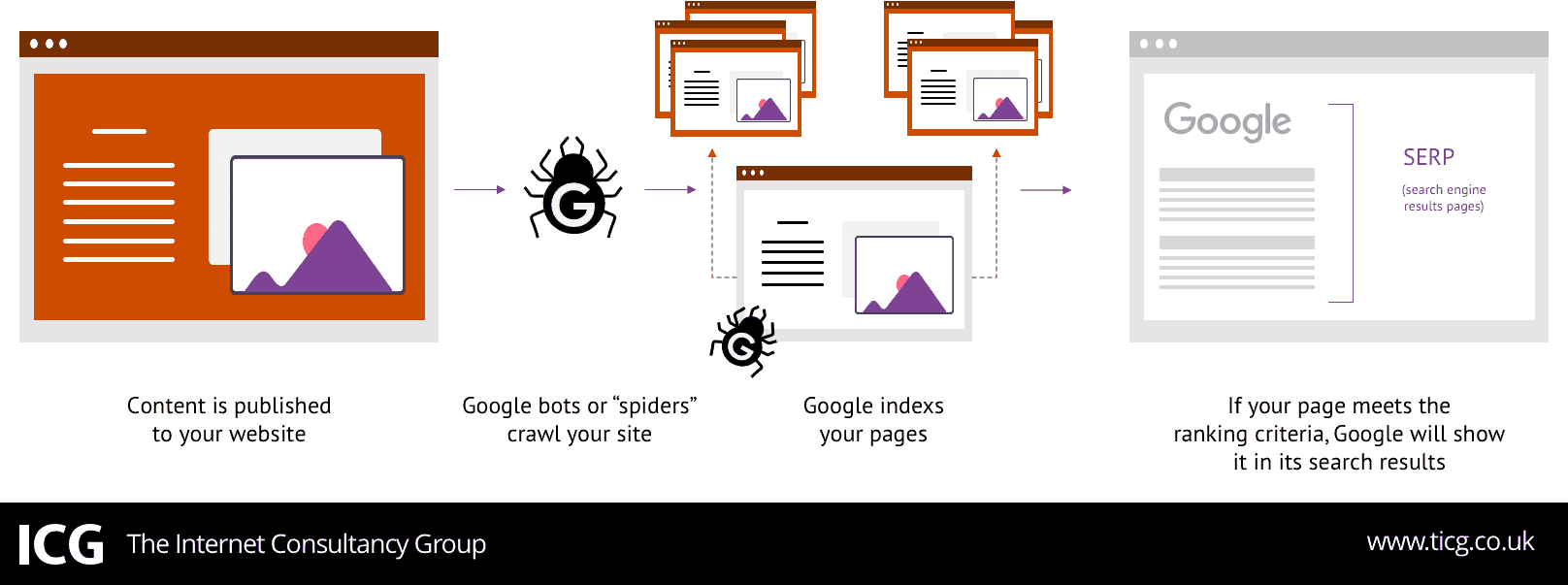

Understanding Crawling:

To optimize your website for technical SEO, it is crucial to understand crawling. Crawling is the process by which search engines discover web pages so that those pages can then be indexed.

Technical SEO is essential for improving both search engine performance and user experience. By optimizing your website's technical aspects, you can increase your website's visibility, gain more traffic, and ultimately grow your business.

Crawling happens when search engines follow links on pages they already know about to find pages they haven't seen before.

For example, every time we publish new blog posts, we add them to our blog archive page.

So the next time a search engine like Google crawls our blog page, it sees the recently added links to new blog posts.

And that's one of the ways Google discovers our new blog posts.
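The discovery step described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how Google's crawler actually works: the `LinkCollector` class, the `discover_new_pages` function, and the `example.com` URLs are all hypothetical names chosen for this example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects the absolute URL of every <a href="..."> on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page's own URL.
                self.links.append(urljoin(self.base_url, value))


def discover_new_pages(page_url, html, known_urls):
    """Return links found on the page that are not already known."""
    collector = LinkCollector(page_url)
    collector.feed(html)
    new = []
    for link in collector.links:
        if link not in known_urls and link not in new:
            new.append(link)
    return new


# Hypothetical blog archive page, for illustration only.
archive_html = """
<ul>
  <li><a href="/blog/old-post/">Old post</a></li>
  <li><a href="/blog/new-post/">New post</a></li>
</ul>
"""
known = {"https://example.com/blog/", "https://example.com/blog/old-post/"}
new_pages = discover_new_pages("https://example.com/blog/", archive_html, known)
print(new_pages)
```

Here the crawler already knows the archive page and the old post, so the only page it discovers is the newly linked one.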

If you want your pages to show up in search results, you first need to ensure that they are accessible to search engines.

There are a few ways to do this:

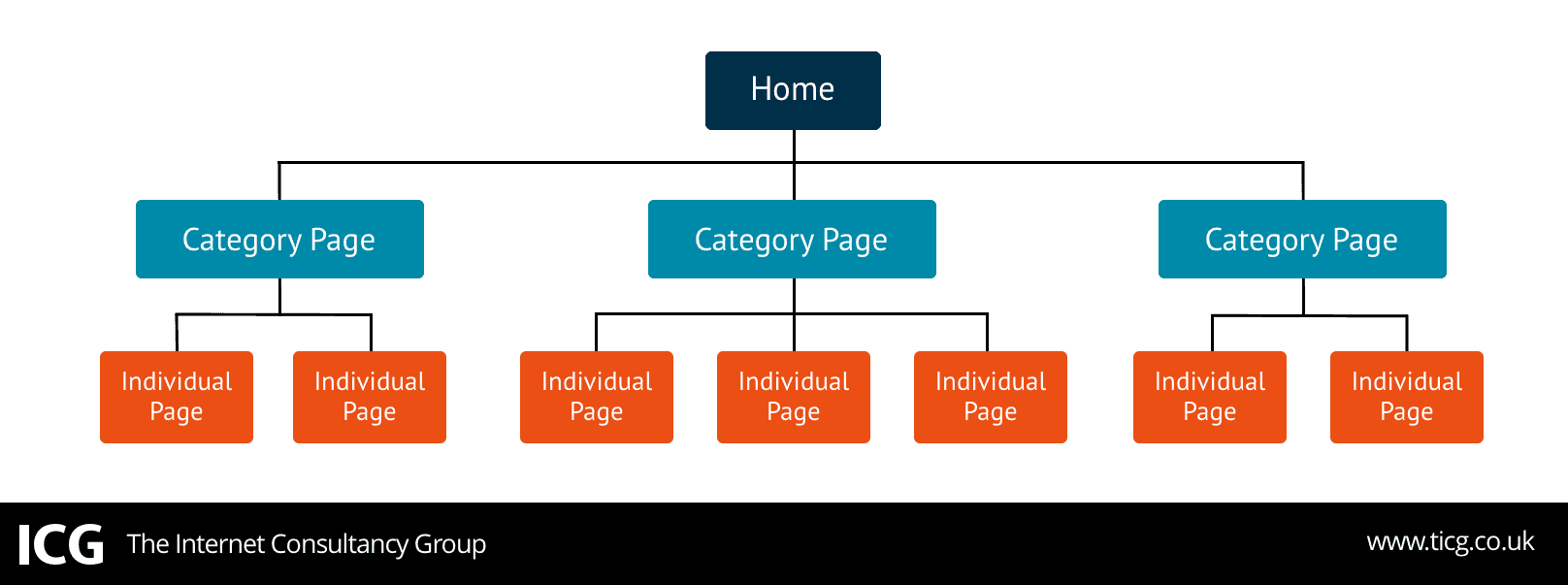

Create SEO-Friendly Site Architecture

Site architecture, also called site structure, is the way pages are linked together within your site.

An effective site structure organizes pages in a way that helps crawlers find your website content quickly and easily.

So when structuring your site, ensure all the pages are just a few clicks away from your homepage.

Like so:

In the site structure above, all the pages are organized in a logical hierarchy.

The homepage links to category pages, and the category pages in turn link to individual subpages on the site.
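One way to check how many clicks each page sits from the homepage is a breadth-first search over the site's internal links. The sketch below assumes you already have the link structure as a dictionary. The `click_depths` function and the sample paths are hypothetical, chosen only to mirror the homepage, category, and subpage hierarchy described here.

```python
from collections import deque


def click_depths(links, homepage):
    """Breadth-first search: fewest clicks from the homepage to each page."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                # First time we reach this page, so this is its shortest path.
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: each key is a page, each value lists the pages it links to.
site_links = {
    "/": ["/shoes/", "/hats/"],
    "/shoes/": ["/shoes/running/", "/shoes/hiking/"],
    "/hats/": ["/hats/caps/"],
}
depths = click_depths(site_links, "/")
print(depths)
```

In this sample, category pages are one click from the homepage and subpages are two, which is the kind of shallow hierarchy the text recommends.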

This structure also reduces the number of orphan pages.

Orphan pages are pages with no internal links pointing to them, making it difficult (or sometimes impossible) for crawlers and users to find those pages.
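Following that definition, orphan pages can be found by comparing the full list of pages with the set of pages that receive at least one internal link. This is a minimal sketch under assumptions: the full page list is taken as given (for instance from a sitemap or CMS export), and `find_orphan_pages` and the sample paths are hypothetical.

```python
def find_orphan_pages(all_pages, links, homepage="/"):
    """Return pages that no other page on the site links to."""
    linked_to = set()
    for source, targets in links.items():
        for target in targets:
            if target != source:  # a page linking to itself does not count
                linked_to.add(target)
    # The homepage is the entry point, so it is never treated as an orphan.
    return sorted(set(all_pages) - linked_to - {homepage})


# Hypothetical page list and internal links, for illustration only.
all_pages = ["/", "/shoes/", "/shoes/running/", "/old-landing-page/"]
internal_links = {
    "/": ["/shoes/"],
    "/shoes/": ["/shoes/running/", "/"],
}
orphans = find_orphan_pages(all_pages, internal_links)
print(orphans)
```

In this sample, `/old-landing-page/` exists on the site but nothing links to it, so it is the only page reported.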